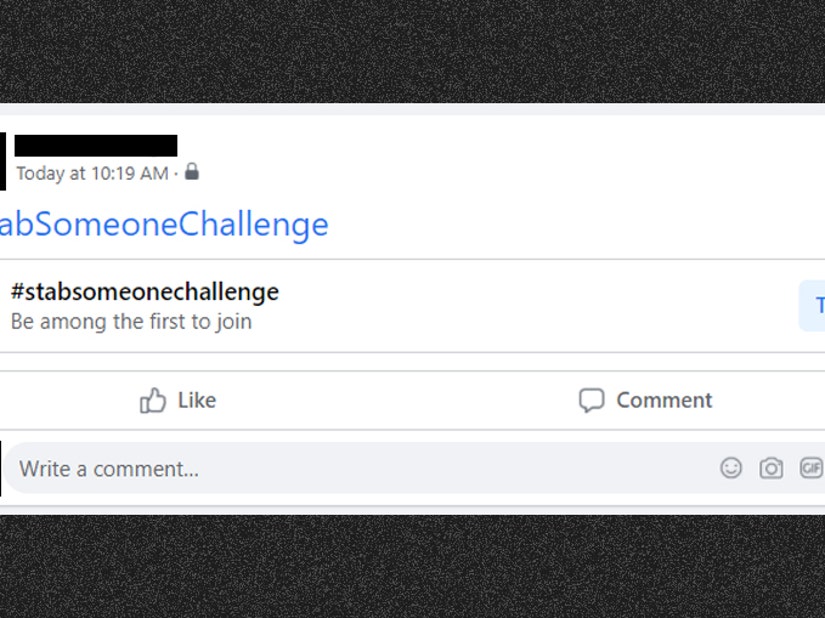

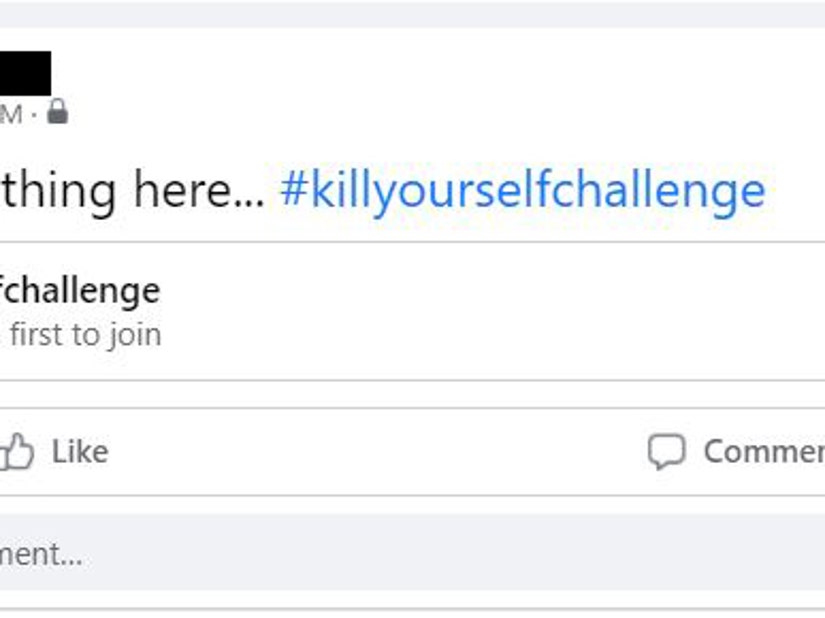

Example of what Facebook allowed to be published

After being flagged by TooFab, the company is trying to figure out how its Community Standards were allowed to be automatically violated.

Update 2/16/2021 12:42 PM

Facebook has confirmed it has temporarily limited the feature, which is being internally investigated.

"We have removed this challenge and are limiting this feature while we investigate this issue," a company spokesperson told TooFab.

Instead of allowing any words — such as rape, murder, suicide — to be automatically garnished with a trophy sticker, a "try it" button and a "Be among the first to join" prompt, each #Challenge will now undergo human review first.

The company has also temporarily removed the #Challenge auto-generation feature while it investigates how its Community Standards were allowed to be automatically violated in the first place.

Original story 2/15/2021 11:21 AM

Facebook has quietly removed its #Challenge feature, without ever acknowledging how irresponsible it was.

The function was rolled out late last year, but was disabled last Friday, just hours after a query from TooFab... a query that Facebook had still not responded to at the time of publication.

The feature automatically detected when a user posted a hashtag with the word "challenge", prompting other users to take part in the challenge themselves.

However, there was absolutely no safeguard whatsoever for the language used in posts... meaning people could create, for example, the #StabSomeoneChallenge, or even the #KillYourselfChallenge.

Using the hashtag auto-generated a little trophy sticker, and the prompt "Be among the first to join."

More concerning still, it also auto-generated a "Try It" button, which copied the unsettling hashtag and pasted it to your timeline, encouraging users to take part and spread it. Alternatively, the challenger could nominate friends to take part by tagging them.

Rape, murder, suicide, treason — no words were off limits.

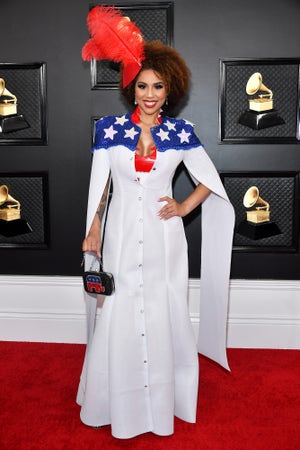

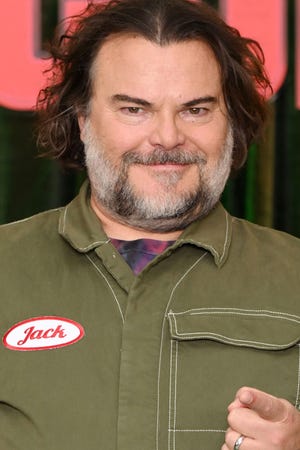

Example of what Facebook allowed to be published

The feature appears to have been introduced on the back of the popularity of issuing challenges on rival TikTok, usually dance or meme-based.

However, many notorious "challenges" spread on social media have already resulted in serious injury and even death, including the Oklahoma teen who died after reportedly taking part in the "Benadryl Challenge", which dared participants to chug enough of the medicine to hallucinate.

It followed the "skull-breaker challenge" (two people sweeping an unwitting third person's legs from beneath them mid-jump); the "outlet challenge" (touching a coin against the exposed prongs on a plug semi-inserted into a socket); the "Cha Cha Slide challenge" (driving one's car to the lyrical instructions); the "pass out challenge" (restricting one's own airway until unconsciousness); and many more besides.

Facebook's #Challenge feature wasn't quite as popular as its designers hoped and didn't manage to grab hold of the TikTok bandwagon; ergo the flaw went unnoticed for months.

But when Facebook was asked about it on Friday, the function was suddenly disabled. The company has not replied to multiple requests for comment since.

Don Heider, Executive Director of the Markkula Center for Applied Ethics at Santa Clara University, said he wasn't surprised by Facebook's shortsightedness, considering its track record.

"Did they anticipate a company could steal people's personal data and micro-target political ads towards them?" he asked. "Did they predict that foreign governments might use the platform to perpetuate falsities and manipulate public opinion? Did they anticipate when they introduced a live aspect to their platform that a terrorist would livestream a shooting of Muslims in a mosque?"

"If they did anticipate, why didn't they do something? I think Facebook has not had a great track record in trying to anticipate the harm that can come from their product, and then aggressively taking steps to mitigate that potential harm."

Part of what the Ethics Center does is help companies predict what the worst imaginable use of a new technology could be.

"What obligation does a social media platform -- any social media platform -- have for what people post on it? This has been the chronic issue with all social media platforms: When do they take responsibility for their role?"

"Just because they have found legal immunity through a fairly big loophole in the law, that doesn't mean they don't have moral responsibility, they don't have ethical responsibility," he said. "And honestly, more and more people are wondering whether they should have legal responsibility as well."

He said one of the most upsetting things about social media companies was the lack of quick response when something bad does happen.

"We're trying to get them to anticipate the problems before they happen, so they can't be subverted in some horrible way, or used in some way that's really going to hurt people," he said. "But in the case of social media companies, they don't seem to be doing any of this... even when something bad happens, their response is 'We're not responsible.'"

"I think there are human beings working at Facebook who care deeply. But the question is: does the CEO care? Do the board of directors care? Do the people at the highest level of the company care? And the answer of course is, if they did, things would change."

"Negative publicity and scandals don't seem to hurt their bottom line. They seem a bit impervious to what might destroy other companies. And I think that's dangerous because it makes you more cavalier."

He pointed to the example of a lettuce distribution company: if a batch were found to cause salmonella, the company would do everything in its power to get it off the shelves immediately.

"The thing that's crazy about social media is we've seen strong evidence that they have been complicit in a number of really horrible and harmful situations, and yet their stock price continues to rise."

He agreed with Apple CEO Tim Cook's prediction of more government regulation of social media.

"Facebook is a young company — and it's acting like a young company, in terms of how it thinks about itself, and its responsibility in the world. I think a lot of people are saying it's time for that particular company — and all the social media companies — to think much more seriously about what effect they are having in the world."

The fallout from the Capitol takeover, he said, appeared to mark the moment at least some social media companies finally took action, the biggest development of course being the lifetime ban of former President Donald Trump from Twitter.

If you or someone you know is struggling with depression or has had thoughts of harming themselves or taking their own life, get help. The National Suicide Prevention Lifeline (1-800-273-8255) provides 24/7, free, confidential support for people in distress.
